by Xukyo | 29 Feb 2024 | Tutorials

In this tutorial, we’ll look at how to perform object recognition with YOLO and OpenCV, using a pre-trained deep neural network. We saw in a previous tutorial how to recognize simple shapes using computer vision. This method only works for certain...

by Xukyo | 24 Feb 2024 | Tutorials

In this tutorial, we’ll look at how to recognize text from images using Python and Tesseract. Tesseract is a tool for recognizing characters, and therefore text, contained in an image (OCR, Optical Character Recognition). Installing Tesseract Under Linux To...

by Xukyo | 13 Feb 2024 | Tutorials

For certain applications, you may find it useful to embed OpenCV in a PyQt interface. In this tutorial, we’ll look at how to correctly integrate and manage a video captured by OpenCV in a PyQt application. N.B.: We use PySide, but conversion to PyQt is quite...

by Xukyo | 28 Nov 2023 | Tutorials

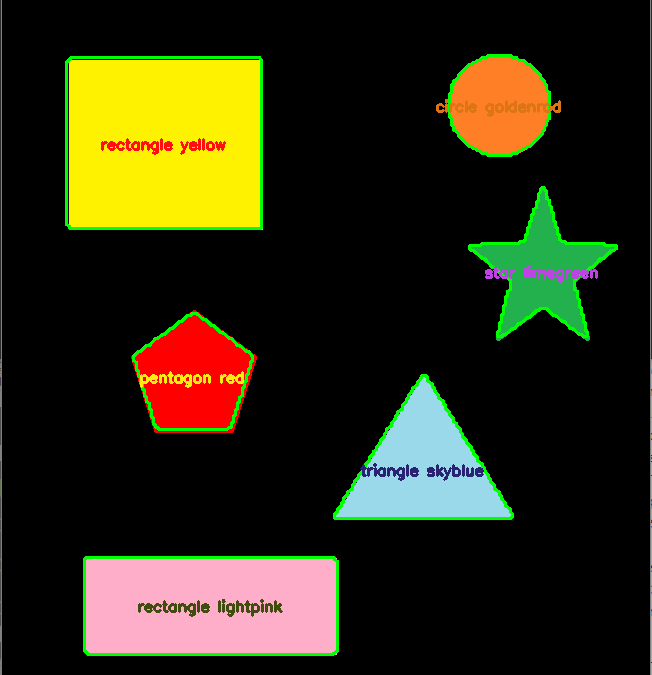

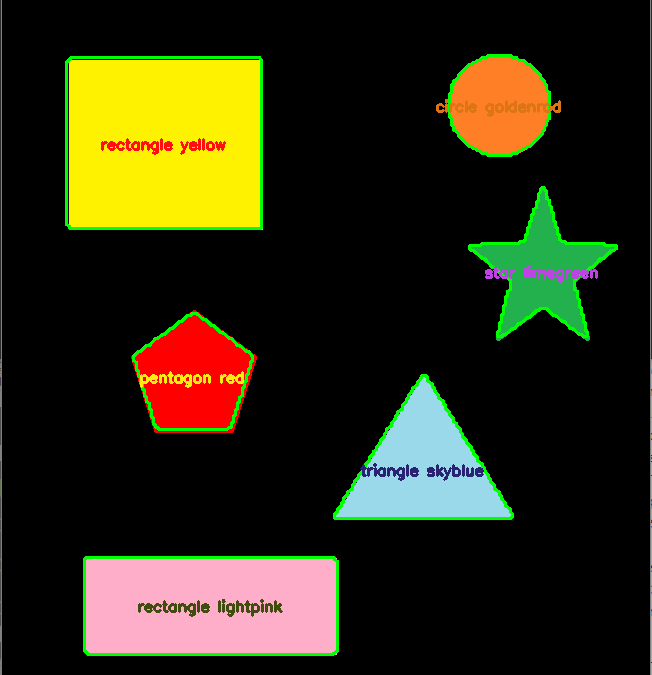

The OpenCV library is used for image processing, in particular shape and color recognition. The library has acquisition functions and image processing algorithms that make image recognition fairly straightforward, without the need for artificial intelligence. This...

by Xukyo | 1 Aug 2023 | Tutorials

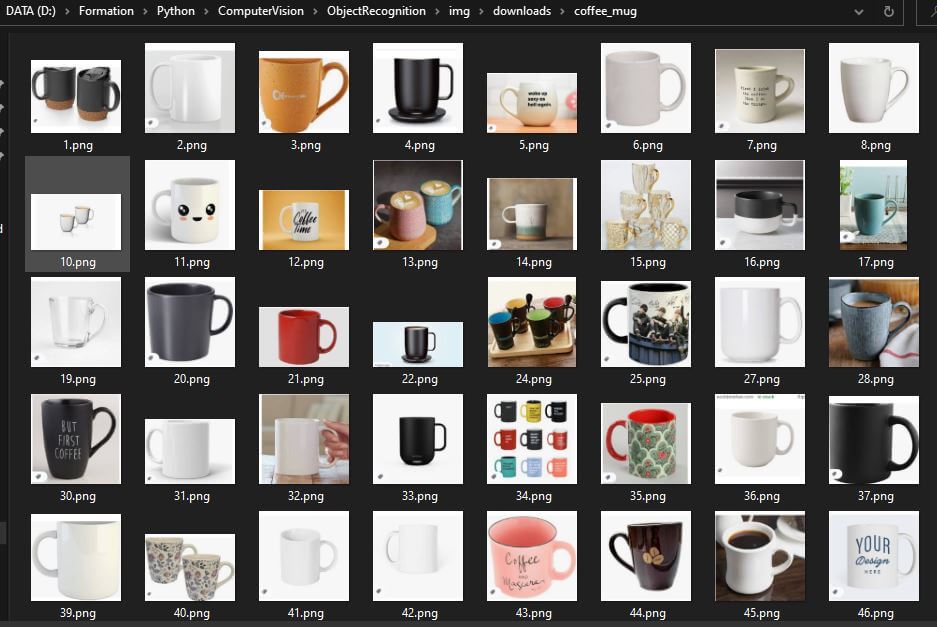

To train a neural network in object detection and recognition, you need an image bank to work with. We’ll see how to download a large number of images from Google using Python. To train a neural network, you need a large amount of data. The more data, the...