To improve object detection performance on the Raspberry Pi, you can write the application in C++ and use optimized libraries to speed up model inference. TensorFlow Lite provides such a library.

A good place to start is QEngineering.

Hardware

- Raspberry Pi 4

- Screen, mouse and keyboard

- SD card with 64-bit Raspberry Pi OS

Configuration

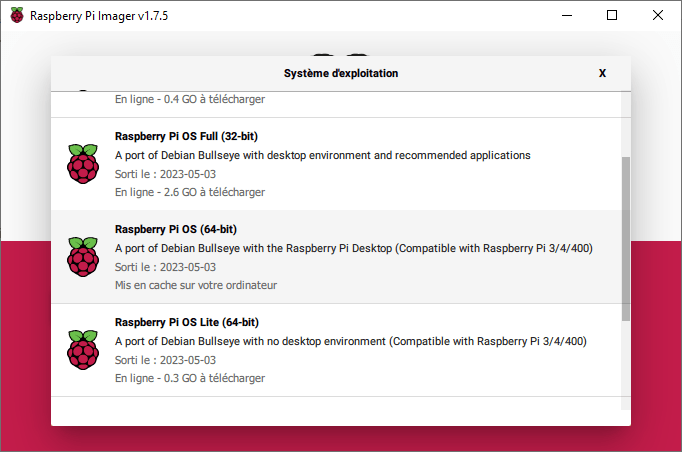

For best performance, you’ll need to install the 64-bit version of Raspberry Pi OS. This version is available in the Raspberry Pi Imager software, under the Raspberry Pi OS (other) menu.

Installing Code::Blocks

Code::Blocks is an IDE, like Thonny or Geany, that lets you compile and run code written in C or C++. Install it with apt-get:

sudo apt-get install codeblocks

Installing OpenCV for C++

To install the C++ version of OpenCV, use the apt-get tool:

sudo apt-get install libopencv-dev

Installing TensorFlow Lite on 64-bit Raspberry Pi OS

For best performance on the Raspberry Pi, one solution is to use the Lite version of TensorFlow. Follow the procedure described on the QEngineering website to install TensorFlow Lite on 64-bit Raspberry Pi OS.

N.B.: don’t forget to reboot the Raspberry Pi after installing TensorFlow Lite.

Object detection code

You can download the code and the project from QEngineering’s GitHub.

The model is loaded from the .tflite file. This project uses MobileNet V1:

std::unique_ptr<tflite::FlatBufferModel> model = tflite::FlatBufferModel::BuildFromFile("detect.tflite");

Next, open a video stream from the camera (or a video or image file):

VideoCapture cap(0);

We then run the model on each frame:

interpreter->Invoke();

Finally, we draw the detection results on the frame and display it. The complete program follows.

#include <stdio.h>
#include <chrono>
#include <cmath>
#include <cstring>
#include <fstream>
#include <iostream>
#include <memory>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/core/ocl.hpp>
#include "tensorflow/lite/interpreter.h"
#include "tensorflow/lite/kernels/register.h"
#include "tensorflow/lite/string_util.h"
#include "tensorflow/lite/model.h"

using namespace cv;
using namespace std;

// Input size expected by the model
const size_t width = 300;
const size_t height = 300;

std::vector<std::string> Labels;
std::unique_ptr<tflite::Interpreter> interpreter;

// Read the label file, one label per line, into Labels
static bool getFileContent(std::string fileName)
{
    // Open the file
    std::ifstream in(fileName.c_str());
    // Check that the file was opened
    if(!in.is_open()) return false;

    std::string str;
    // Read the file line by line until the end
    while (std::getline(in, str))
    {
        // Keep only non-empty lines
        if(str.size()>0) Labels.push_back(str);
    }
    // Close the file
    in.close();
    return true;
}

// Run the model on one frame and draw the detections on it
void detect_from_video(Mat &src)
{
    Mat image;
    int cam_width =src.cols;
    int cam_height=src.rows;

    // resize the frame to the model input size and copy it into the input tensor
    cv::resize(src, image, Size((int)width, (int)height));
    memcpy(interpreter->typed_input_tensor<uchar>(0), image.data, image.total() * image.elemSize());

    // cout << "tensors size: " << interpreter->tensors_size() << "\n";
    // cout << "nodes size: " << interpreter->nodes_size() << "\n";
    // cout << "inputs: " << interpreter->inputs().size() << "\n";
    // cout << "input(0) name: " << interpreter->GetInputName(0) << "\n";
    // cout << "outputs: " << interpreter->outputs().size() << "\n";

    interpreter->Invoke(); // run your model

    // output tensors: boxes, class indices, scores, number of detections
    const float* detection_locations = interpreter->tensor(interpreter->outputs()[0])->data.f;
    const float* detection_classes=interpreter->tensor(interpreter->outputs()[1])->data.f;
    const float* detection_scores = interpreter->tensor(interpreter->outputs()[2])->data.f;
    const int num_detections = (int)*interpreter->tensor(interpreter->outputs()[3])->data.f;

    //there are ALWAYS 10 detections no matter how many objects are detectable
    // cout << "number of detections: " << num_detections << "\n";

    const float confidence_threshold = 0.5;
    for(int i = 0; i < num_detections; i++){
        if(detection_scores[i] > confidence_threshold){
            int det_index = (int)detection_classes[i]+1;
            // skip class indices that have no entry in the label file
            if(det_index < 0 || det_index >= (int)Labels.size()) continue;
            // boxes are normalized as ymin, xmin, ymax, xmax: scale them to the frame size
            float y1=detection_locations[4*i ]*cam_height;
            float x1=detection_locations[4*i+1]*cam_width;
            float y2=detection_locations[4*i+2]*cam_height;
            float x2=detection_locations[4*i+3]*cam_width;

            Rect rec((int)x1, (int)y1, (int)(x2 - x1), (int)(y2 - y1));
            rectangle(src,rec, Scalar(0, 0, 255), 1, 8, 0);
            putText(src, format("%s", Labels[det_index].c_str()), Point(x1, y1-5) ,FONT_HERSHEY_SIMPLEX,0.5, Scalar(0, 0, 255), 1, 8, 0);
        }
    }
}

int main(int argc,char ** argv)
{
    float f;
    float FPS[16];
    int i, Fcnt=0;
    Mat frame;
    chrono::steady_clock::time_point Tbegin, Tend;

    for(i=0;i<16;i++) FPS[i]=0.0;

    // Load model
    std::unique_ptr<tflite::FlatBufferModel> model = tflite::FlatBufferModel::BuildFromFile("detect.tflite");
    if(!model)
    {
        cerr << "ERROR: Unable to load detect.tflite" << endl;
        return -1;
    }

    // Build the interpreter
    tflite::ops::builtin::BuiltinOpResolver resolver;
    tflite::InterpreterBuilder(*model.get(), resolver)(&interpreter);
    if(!interpreter || interpreter->AllocateTensors() != kTfLiteOk)
    {
        cerr << "ERROR: Unable to build the interpreter" << endl;
        return -1;
    }

    // These settings only need to be applied once, not on every frame
    interpreter->SetAllowFp16PrecisionForFp32(true);
    interpreter->SetNumThreads(4); //quad core

    // Get the names
    bool result = getFileContent("COCO_labels.txt");
    if(!result)
    {
        cerr << "ERROR: loading labels failed" << endl;
        return -1;
    }

    // Use VideoCapture cap(0); to read from the camera instead of a file
    VideoCapture cap("James.mp4");
    if (!cap.isOpened()) {
        cerr << "ERROR: Unable to open the video source" << endl;
        return -1;
    }

    cout << "Start grabbing, press ESC on Live window to terminate" << endl;

    while(1){
        // frame=imread("Traffic.jpg"); //need to refresh frame before dnn class detection
        cap >> frame;
        if (frame.empty()) {
            cerr << "ERROR: Unable to grab a frame (or end of the video)" << endl;
            break;
        }

        Tbegin = chrono::steady_clock::now();
        detect_from_video(frame);
        Tend = chrono::steady_clock::now();

        //calculate frame rate, averaged over the last 16 frames
        f = chrono::duration_cast <chrono::milliseconds> (Tend - Tbegin).count();
        if(f>0.0) FPS[((Fcnt++)&0x0F)]=1000.0/f;
        for(f=0.0, i=0;i<16;i++){ f+=FPS[i]; }
        putText(frame, format("FPS %0.2f", f/16),Point(10,20),FONT_HERSHEY_SIMPLEX,0.6, Scalar(0, 0, 255));

        //show output
        // cout << "FPS" << f/16 << endl;
        imshow("RPi 4 - 1,9 GHz - 2 Mb RAM", frame);

        char esc = waitKey(5);
        if(esc == 27) break;
    }

    cout << "Closing the video source" << endl;
    destroyAllWindows();
    cout << "Bye!" << endl;

    return 0;
}

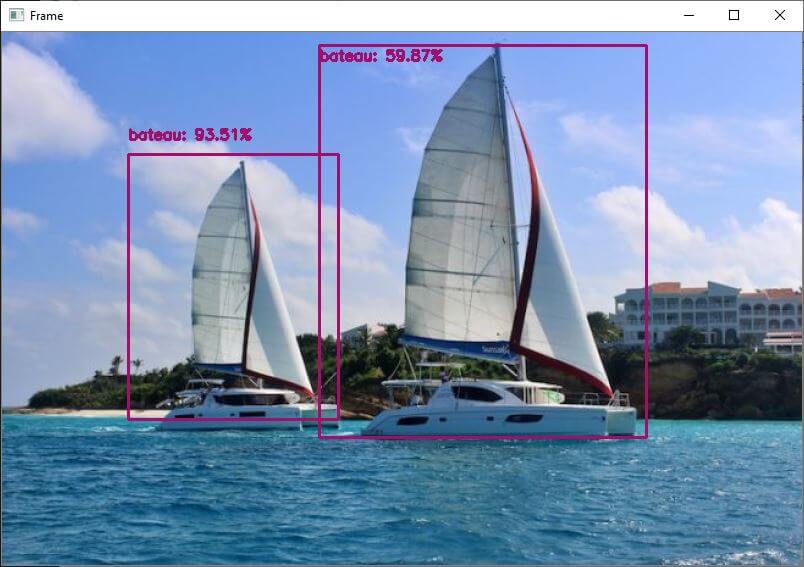

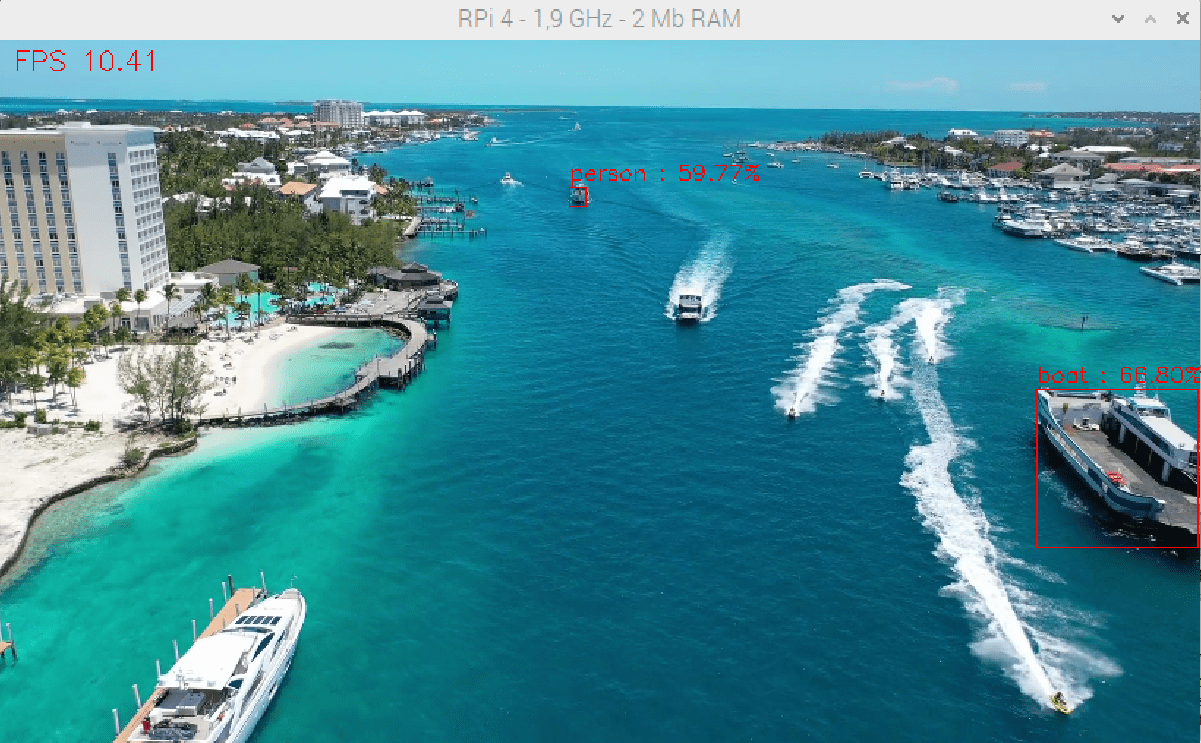

With this code, object detection runs at 10 to 20 FPS, but detection accuracy is low in this example.

Further testing with other examples and models is needed, but this project shows the value of C++ and TensorFlow Lite for object detection on the Raspberry Pi.