by Xukyo | 29 Mar 2024 | Tutorials

In this tutorial, we’ll look at how to give your Android device a voice using a text-to-speech (TTS) library. Whether you’re developing applications for the visually impaired or just want to liven up your Android system, giving your project a voice can be...

by Xukyo | 19 Mar 2024 | Tutorials

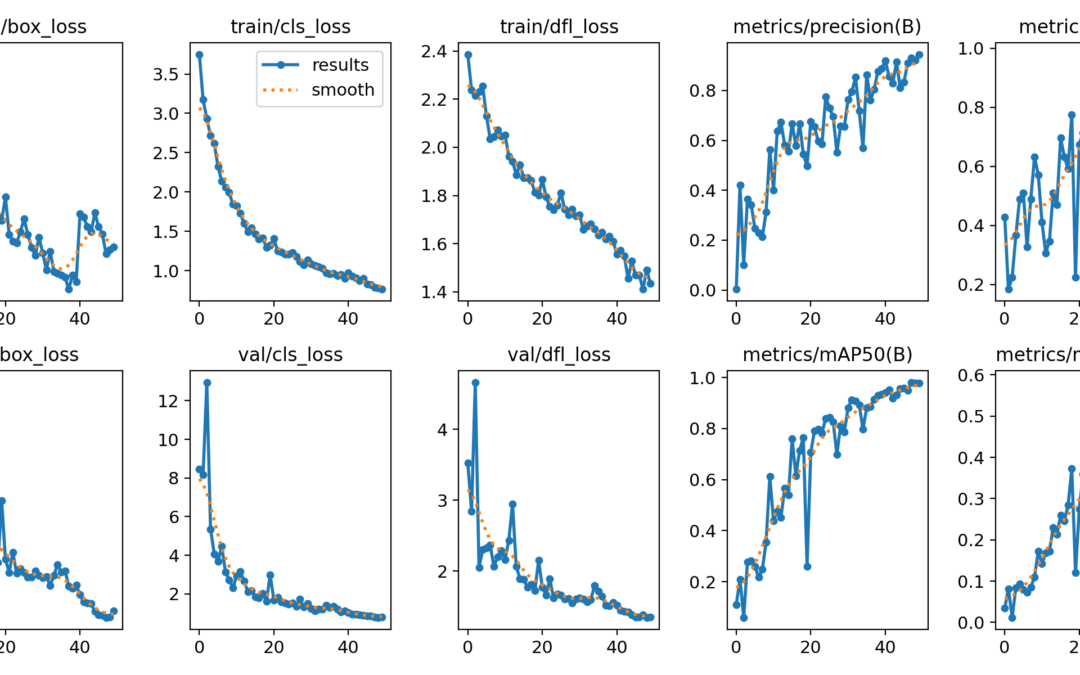

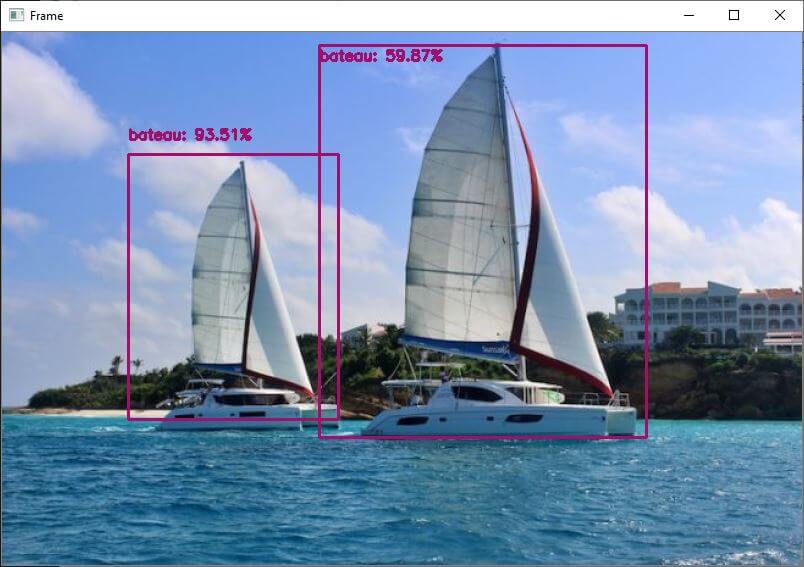

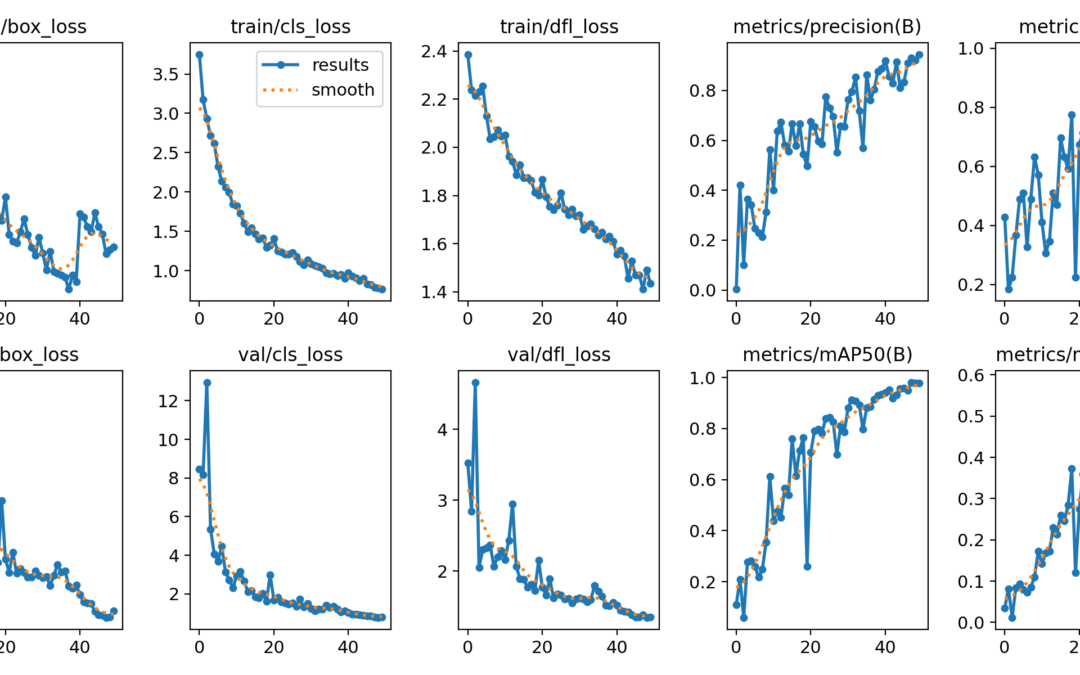

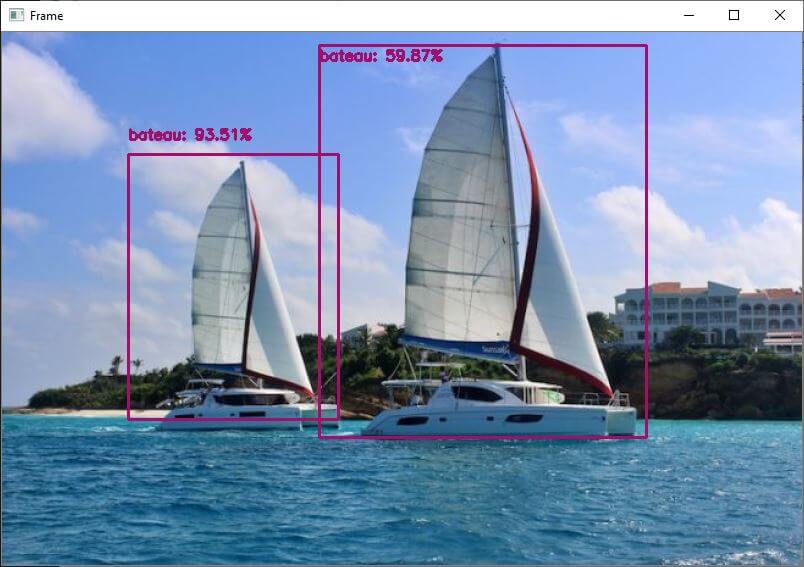

In this tutorial, we’ll look at how to train a YOLO model for object recognition on specific data. The main difficulty lies in creating the image bank used for training. Hardware: a computer with a Python 3 installation; a camera. Principle: We saw in a...
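The excerpt doesn't say which YOLO implementation or annotation tool the tutorial uses. As an illustration only, assuming the common Darknet/Ultralytics text label format (one `.txt` file per image, one line per object: class index, then box centre, width and height, each normalized by the image size), a hypothetical helper that converts pixel-space boxes from your own annotations into label lines might look like this:

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel-space box (x_min, y_min, x_max, y_max) into one
    YOLO-style label line: 'class x_center y_center width height',
    with all four box values normalized to [0, 1] (assumed label format)."""
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} does not fit in a {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w  # box centre, as a fraction of width
    y_c = (y_min + y_max) / 2 / img_h  # box centre, as a fraction of height
    w = (x_max - x_min) / img_w        # box width, as a fraction of width
    h = (y_max - y_min) / img_h        # box height, as a fraction of height
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def write_label_file(path, annotations, img_w, img_h):
    """Write every (class_id, box) annotation of one image to a .txt label file
    (hypothetical helper; one line per annotated object)."""
    with open(path, "w") as f:
        for class_id, box in annotations:
            f.write(to_yolo_line(class_id, box, img_w, img_h) + "\n")
```

For a 640×480 image with a box from (100, 50) to (300, 250), `to_yolo_line(0, (100, 50, 300, 250), 640, 480)` returns `"0 0.312500 0.312500 0.312500 0.416667"`. Normalizing by the image size keeps the labels valid if the training pipeline resizes the images.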

by Xukyo | 4 Mar 2024 | Tutorials

To improve performance on the Raspberry Pi, you can use C++ and optimized libraries to speed up object detection models. This is what TensorFlow Lite offers. A good place to start is QEngineering. Hardware: Raspberry Pi 4...

by Xukyo | 3 Mar 2024 | Tutorials

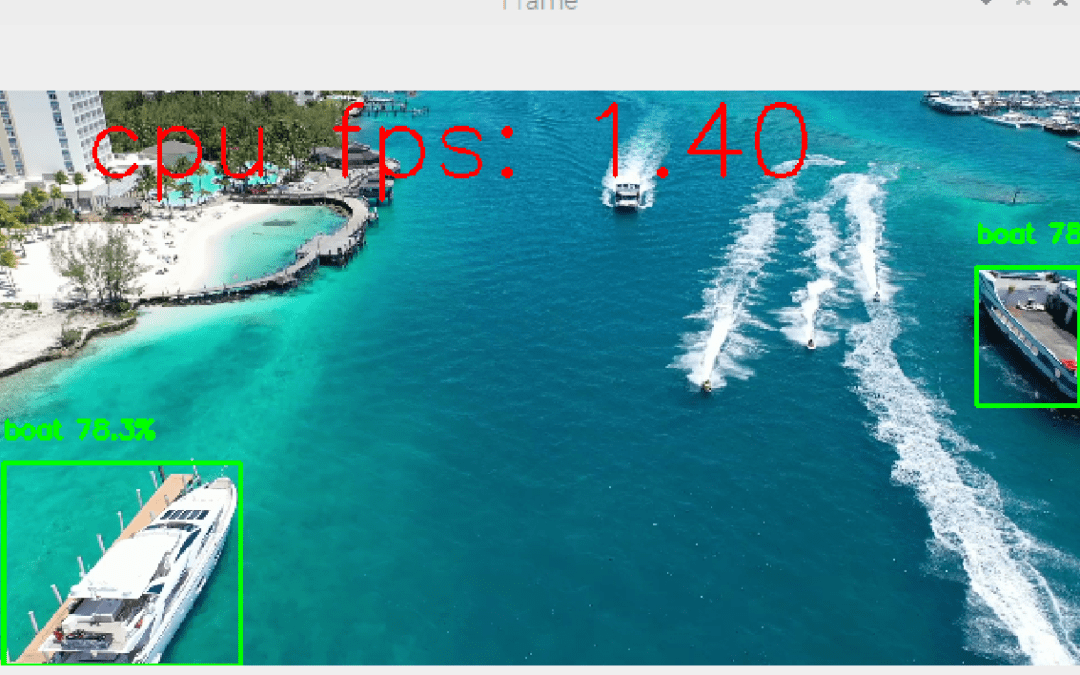

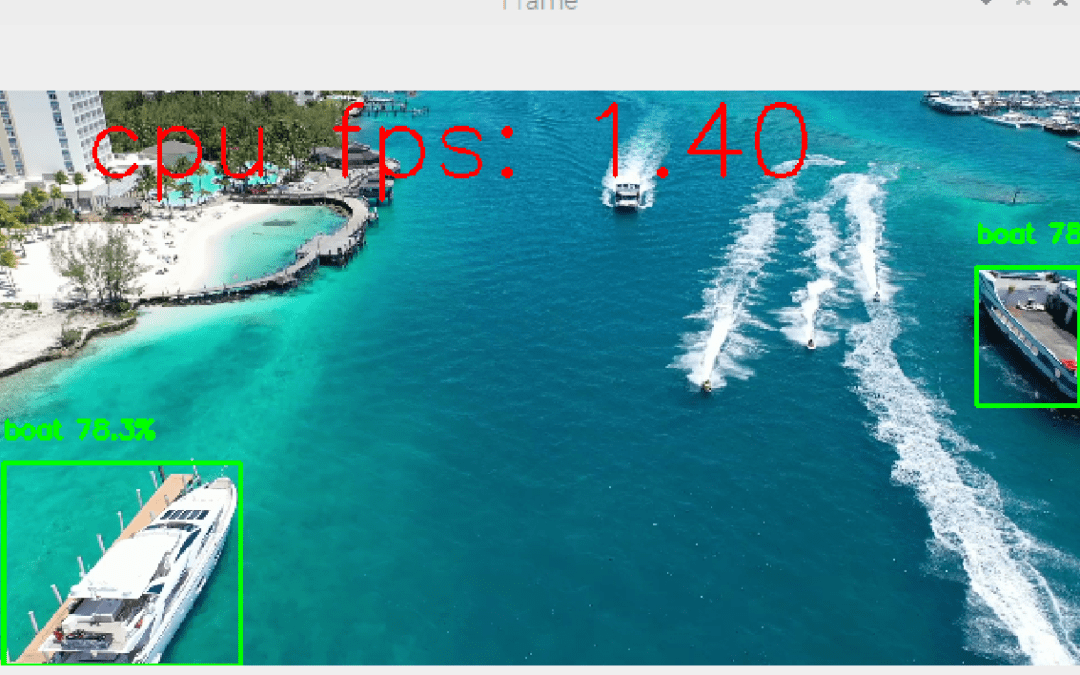

It’s possible to embed object recognition models such as YOLO on a Raspberry Pi. Because the Raspberry Pi has far less computing power than a desktop computer, real-time recognition is slower. However, it is perfectly possible to develop algorithms using...
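Since real-time recognition is slower on a Raspberry Pi, it helps to measure throughput before and after any optimization. The following is a minimal, framework-agnostic sketch in Python; `measure_fps` and the `detect` callable are hypothetical placeholders, not part of any YOLO library, and you would pass in whatever inference call your own setup uses:

```python
import time


def measure_fps(detect, frames, warmup=2):
    """Return the average frames per second achieved by `detect` over `frames`.

    `detect` is any callable taking one frame (a hypothetical stand-in for a
    YOLO inference call). The first `warmup` frames are processed but not
    timed, because the first inferences can be slower (loading, caching).
    """
    frames = list(frames)
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        detect(frame)
    start = time.perf_counter()
    for frame in frames[warmup:]:
        detect(frame)
    elapsed = time.perf_counter() - start
    timed = len(frames) - warmup
    return timed / elapsed if elapsed > 0 else float("inf")
```

For example, a dummy detector that takes 10 ms per frame, `measure_fps(lambda f: time.sleep(0.01), range(12))`, reports slightly under 100 frames per second; swapping in a real model call gives a comparable figure for your board.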

by Xukyo | 1 Mar 2024 | Tutorials

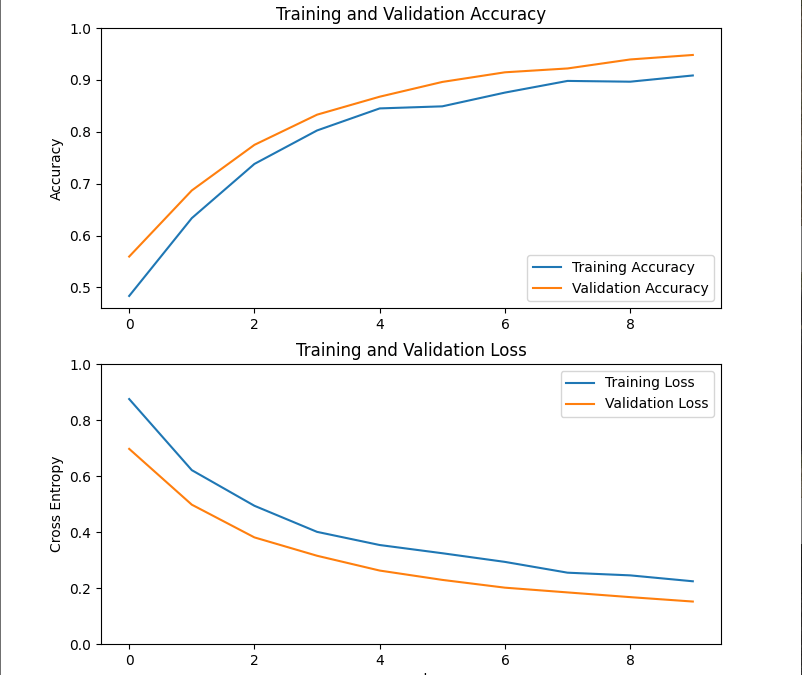

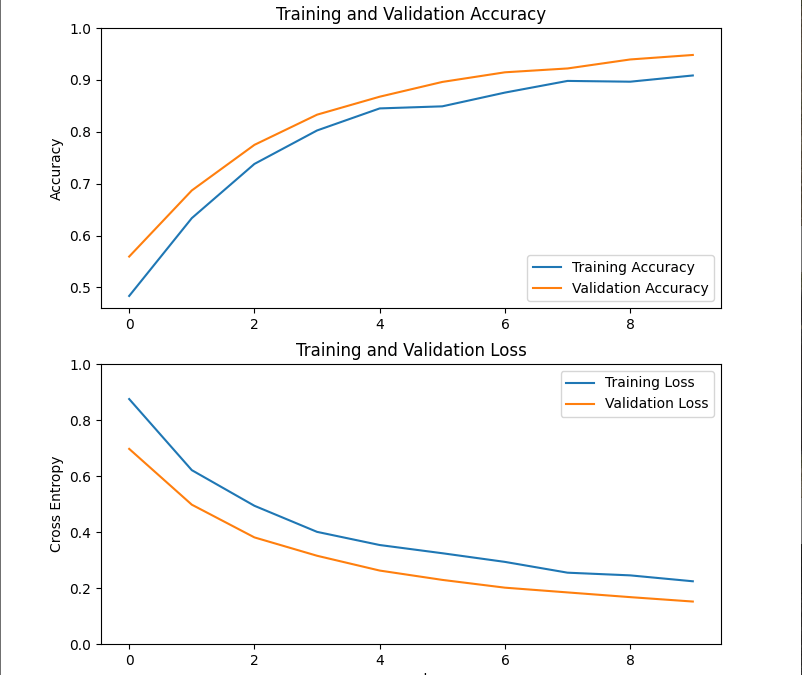

In this tutorial, we’ll train a MobileNetV2 TensorFlow model with Keras so that it can be applied to our problem. We’ll then be able to use it in real time to classify new images. For this tutorial, we assume that you have followed the previous tutorials:...